I led the design of this project from June 2015 to Feb 2016 on behalf of Intel, collaborating with Frima on visual design and implementation.

This application uses Intel® RealSense™ technology for scene perception and analysis, which allowed us to calculate depth and distance within the viewfinder in real time.
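To make the depth-and-distance idea concrete, here is a minimal sketch of how a length between two tapped pixels could be derived from depth values. It is not the app's actual code: it assumes a simple pinhole camera model, and the function names and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values chosen for illustration.

```python
import math

def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Turn pixel (u, v) plus its depth in meters into a 3D camera-space point.

    Assumes an ideal pinhole camera with no lens distortion.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def distance_between(p1, p2):
    """Straight-line distance between two 3D points, in meters."""
    return math.dist(p1, p2)

# Hypothetical intrinsics for a 640x480 depth image.
fx = fy = 600.0
cx, cy = 320.0, 240.0

# Two tapped pixels on the same row, both 2 m from the camera.
a = deproject(320, 240, 2.0, fx, fy, cx, cy)
b = deproject(620, 240, 2.0, fx, fy, cx, cy)
length_m = distance_between(a, b)
```

With these assumed numbers, the two points sit 300 pixels apart at 2 m, which works out to a measured length of 1 m.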

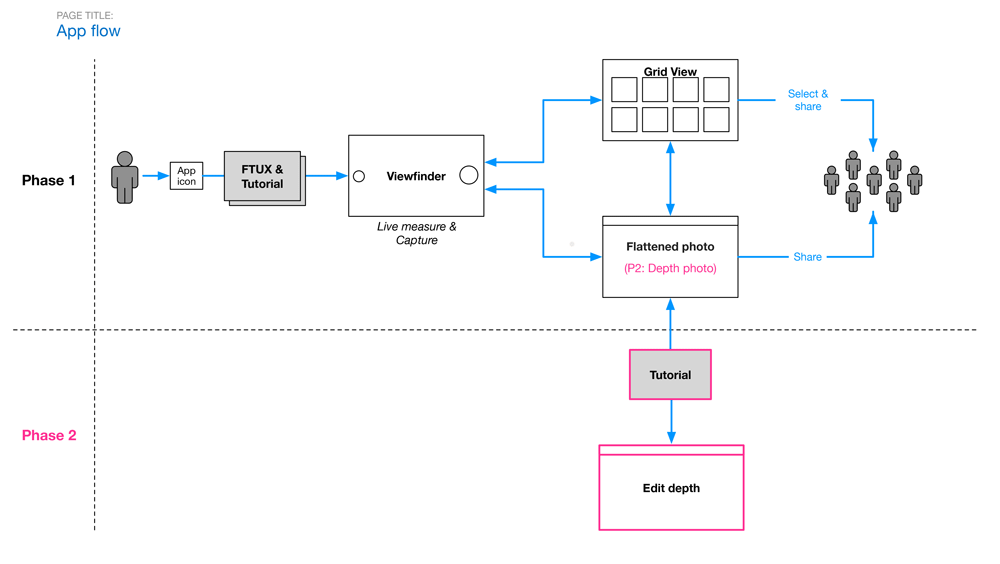

Working alongside the project's tech lead, we narrowed the scope of work and focused on two main usage flows:

(1) a person who needs to measure something now, and

(2) a person who wants to record a measurement to view later.

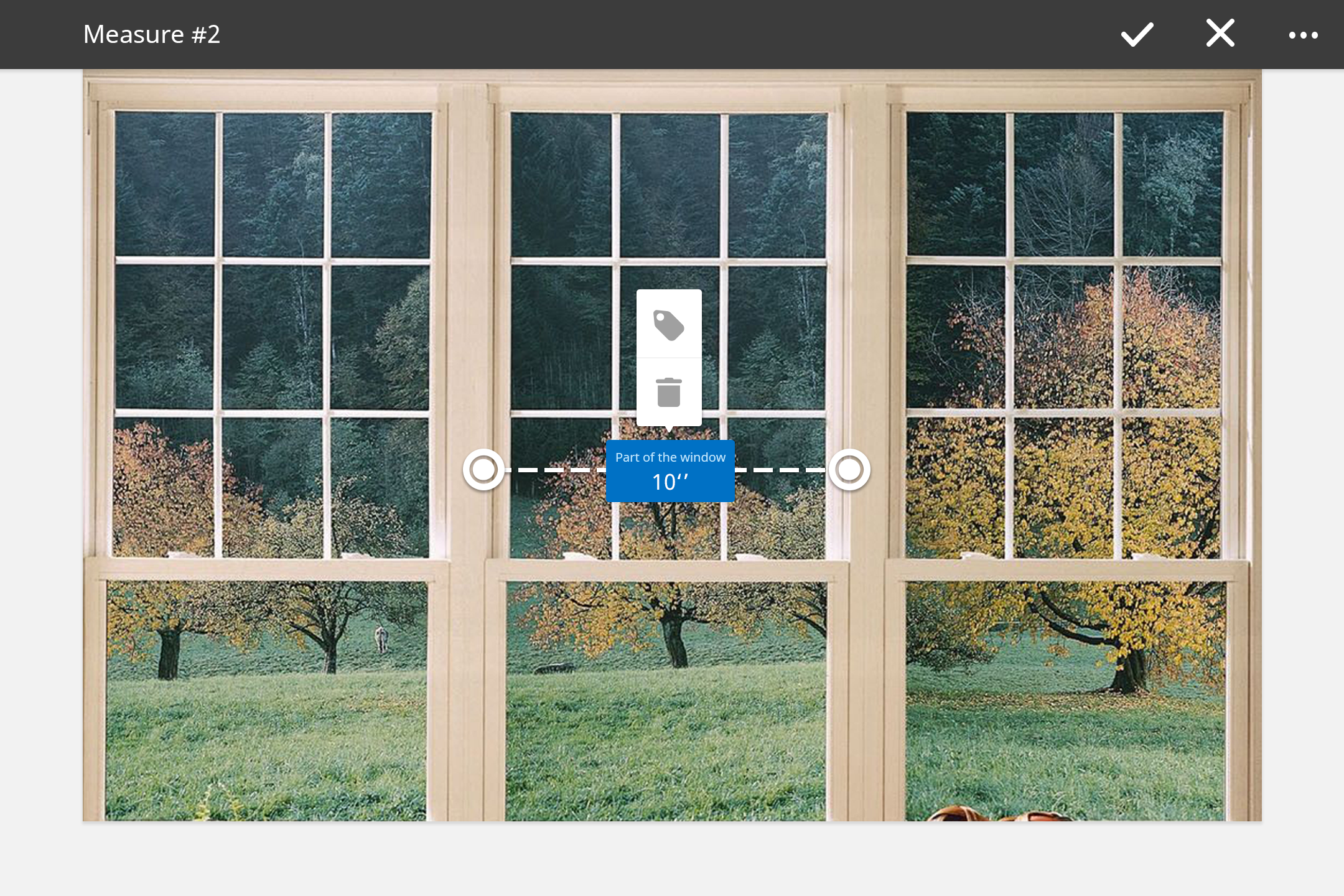

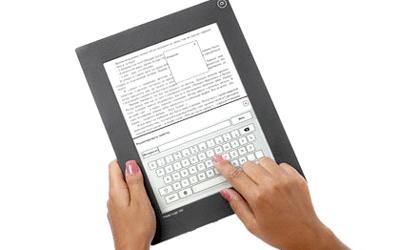

Prior to the final Measure It application, we created the first version of the Measurement App for the Dell Venue 8 7000 tablet, which uses stereoscopic cameras. The cameras allowed us to think about photography in a new way, letting us extract actionable data from a static image. This was the measurement app concept.

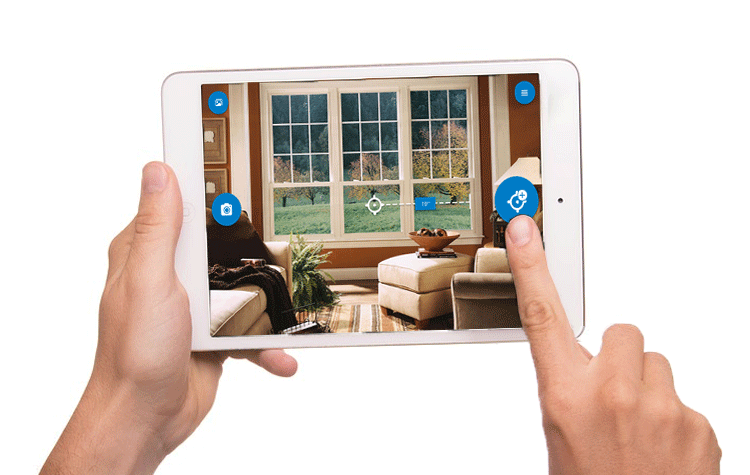

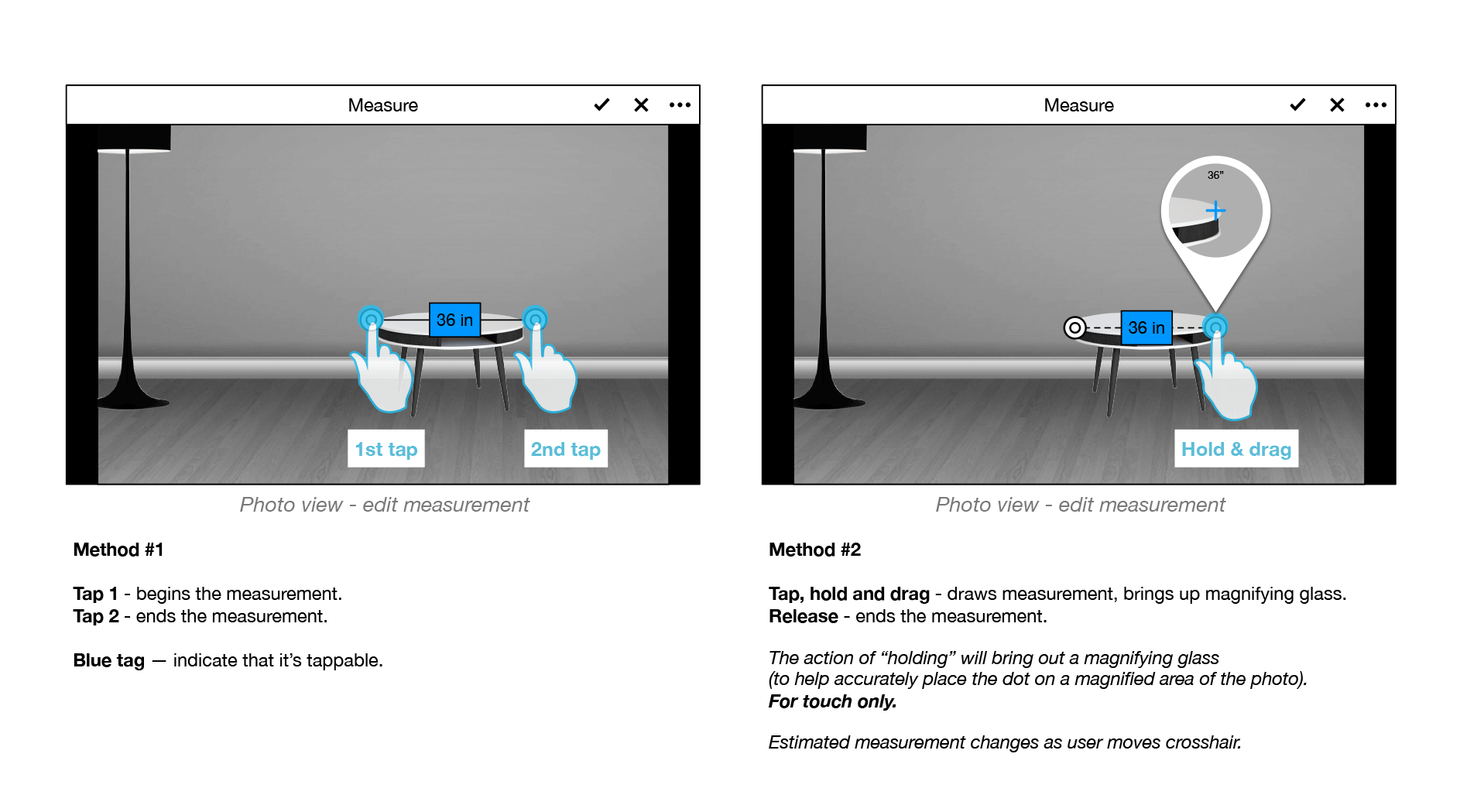

Based on initial behavior questions and observations, we allowed users to draw measurements on their photos in two ways:

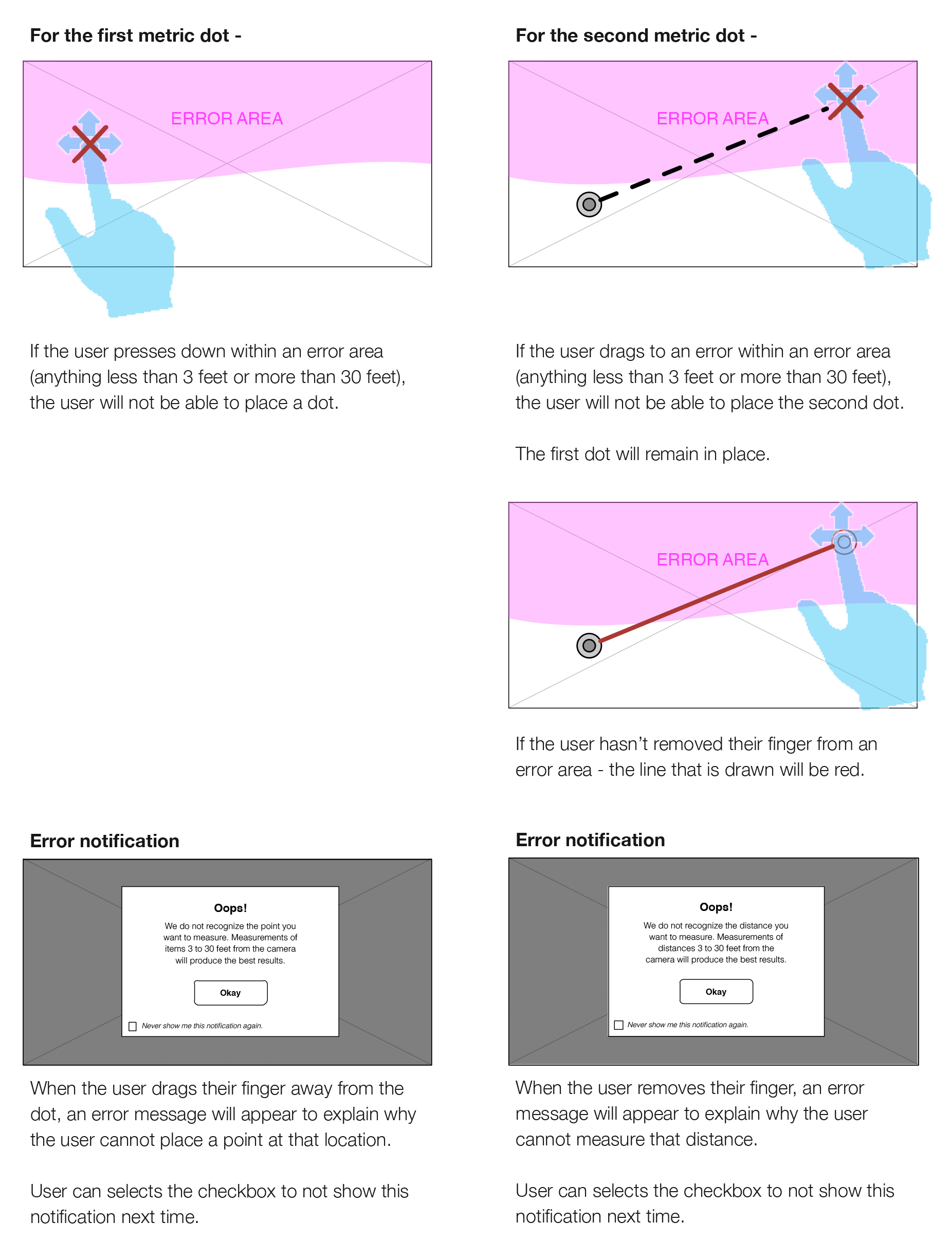

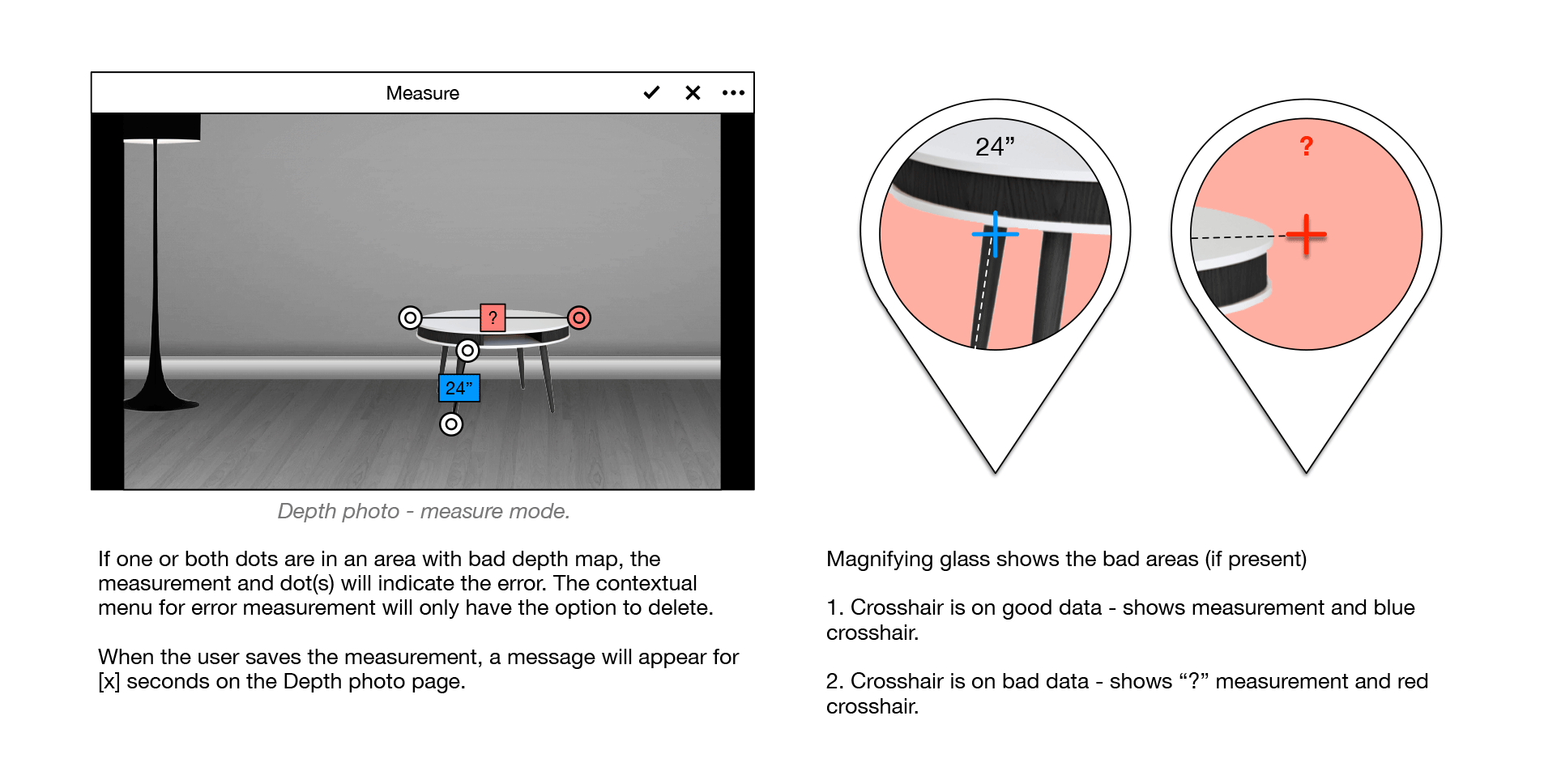

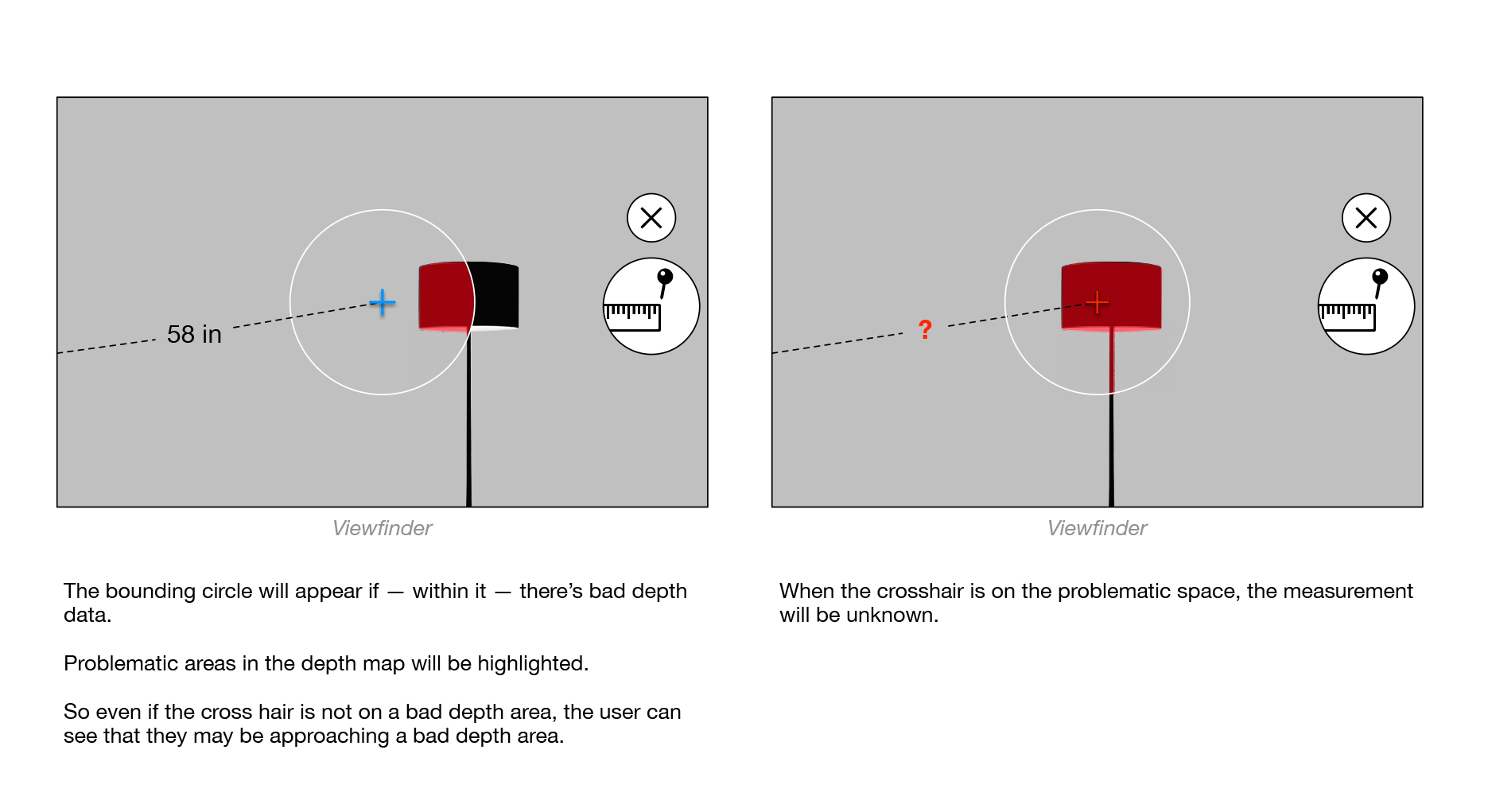

For still photos, we needed a way to show users what can and cannot be measured. An error occurred when the captured depth map had bad data (the object is too close, too far away, etc.). Our first version let users see every area of bad data on the photo.

The thinking behind showing all errors was to teach users what type of content will not contain depth information, for example the sky. However, user testing showed that displaying every error felt too negative and made the product feel like a failure.

So for our second version, we highlighted only a dot placed in a bad depth area. We also showed bad depth areas when the magnifying glass appeared, so the error areas were constrained to a smaller space and users could learn, from where their finger was, where good data exists.
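The second-version behavior can be sketched roughly as follows: check whether the dot itself sits on bad depth, and collect bad samples only inside a small window around the finger (the magnifying glass area). This is an illustration, not the shipped logic. The range limits `near_m` and `far_m`, the use of `0.0` for missing depth, and all function names are assumptions.

```python
def is_valid_depth(depth_m, near_m=0.3, far_m=4.0):
    """A sample is usable only if it falls inside the assumed working range.

    A value of 0.0 is treated as "no depth captured" and fails this check.
    """
    return near_m <= depth_m <= far_m

def bad_depth_in_window(depth_map, row, col, radius):
    """Return (row, col) positions of bad samples in a square window
    centered on the finger position, clipped to the image bounds."""
    rows, cols = len(depth_map), len(depth_map[0])
    bad = []
    for r in range(max(0, row - radius), min(rows, row + radius + 1)):
        for c in range(max(0, col - radius), min(cols, col + radius + 1)):
            if not is_valid_depth(depth_map[r][c]):
                bad.append((r, c))
    return bad

# Tiny hypothetical depth map in meters: one missing sample, two too far away.
depth_map = [
    [1.2, 1.2, 0.0, 9.0],
    [1.3, 1.2, 1.1, 9.0],
    [1.3, 1.3, 1.2, 1.2],
]

dot_is_bad = not is_valid_depth(depth_map[0][3])           # dot placed on far-away data
magnifier_errors = bad_depth_in_window(depth_map, 1, 1, 1)  # finger at row 1, col 1
```

Limiting the check to the window is what keeps the error display small: the rest of the photo is never marked, even where its depth is unusable.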

We conducted a usability study, asking participants to perform specific tasks and then rate the features and quality of the experience.

This was formal testing done in Calabasas, CA, and Woburn, MA. The test had a quantitative portion, and we pulled out 20 individuals for qualitative, deep-dive questions.

We learned that even though it was a photography application, people wanted and expected 100% accuracy because they viewed it more as a utility tool. Because of this expectation, people found having to capture a photo first and measure later difficult and not an intuitive way to measure objects.

We also learned that people needed more feedback while measurements were being created. The Dell tablet did not have haptic feedback, so we improvised and tried sound vibrations. The downside is that the volume needed to be at maximum for the vibration to be felt.

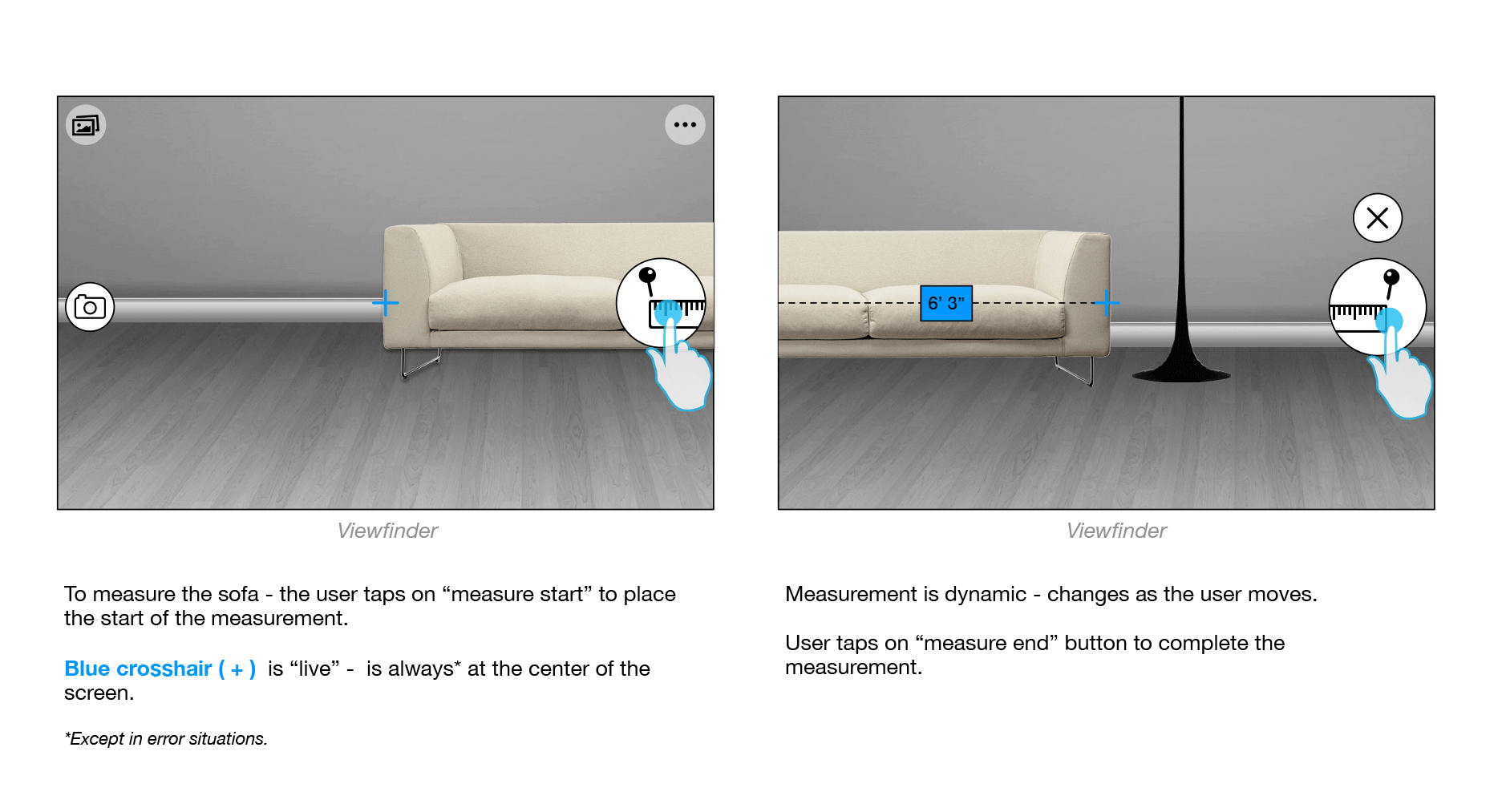

As we continued improving the measurement application, we were introduced to RealSense technology, which gave us more processing power and therefore faster processing. This let us capture data much faster, which gave us Live measurement capabilities. It addressed some of the initial concerns about measuring larger objects and about measuring first and capturing later.

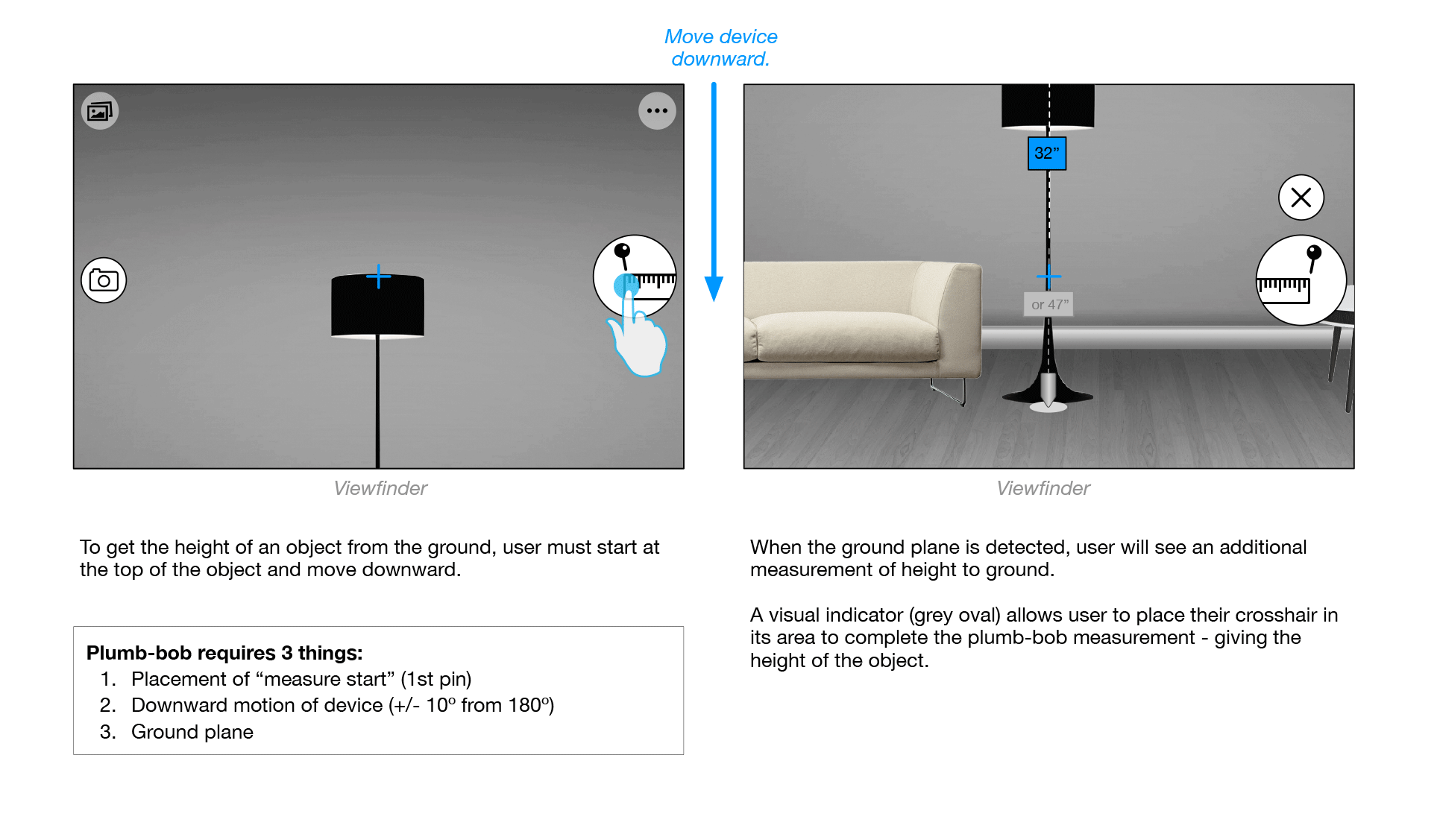

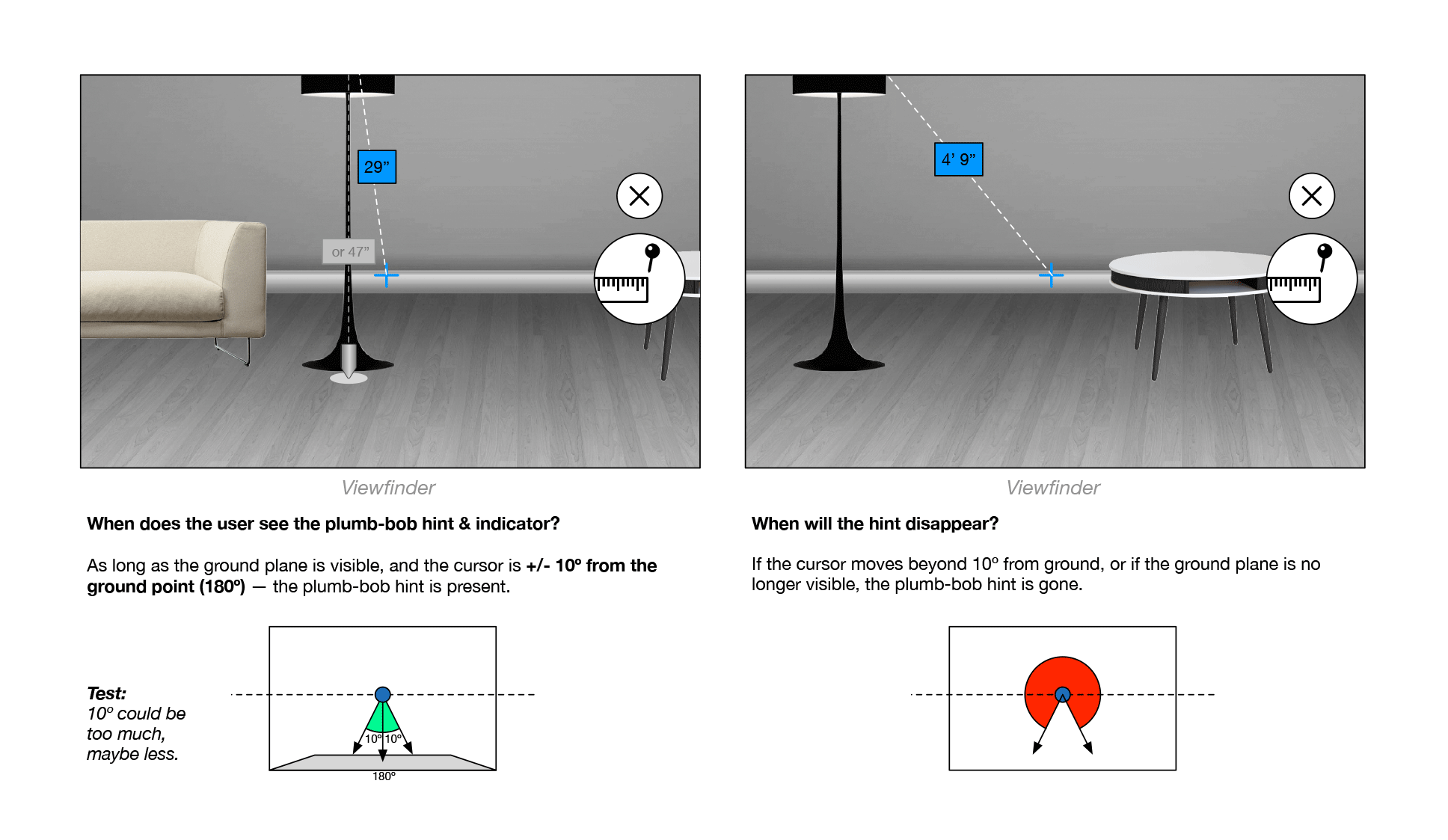

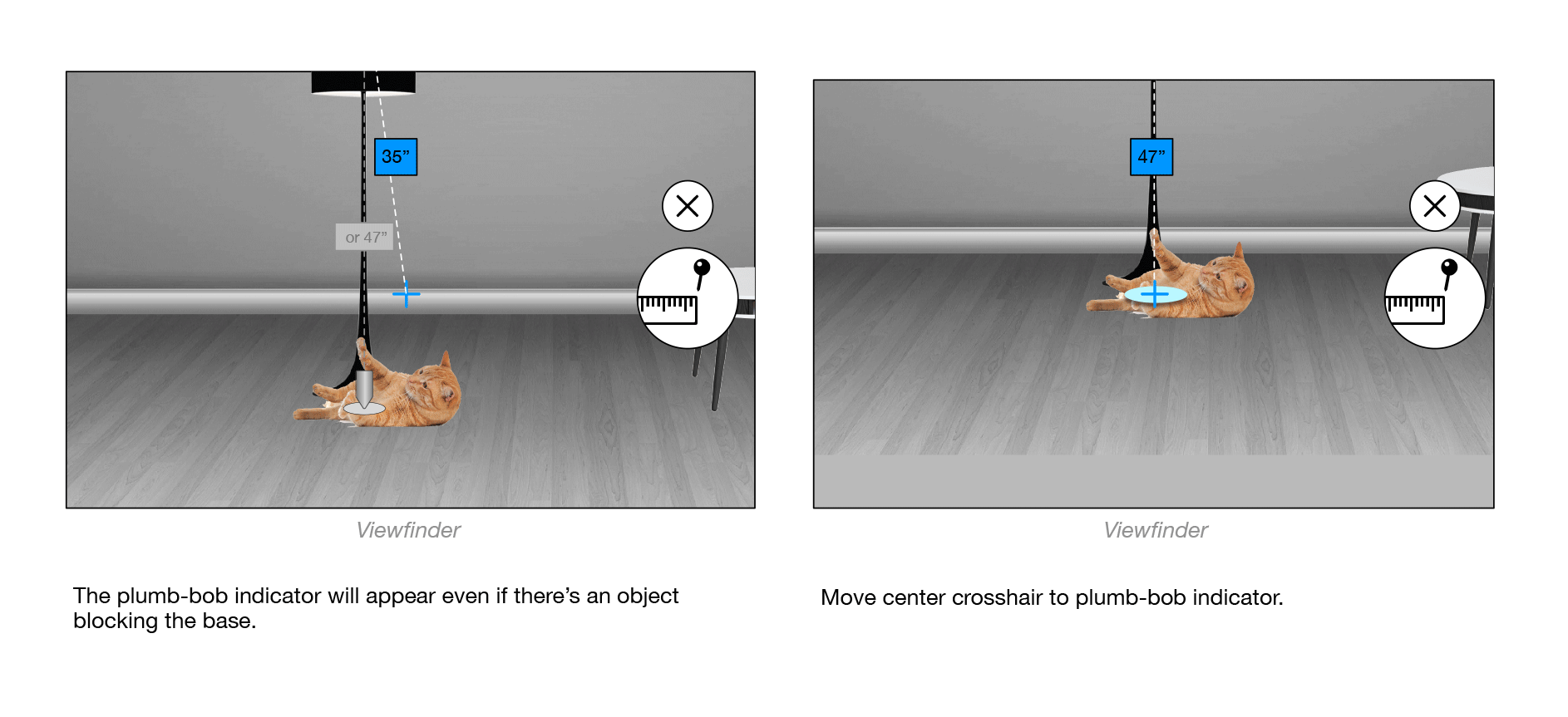

One of the application's highlights is the "plumb-bob" height measurement feature. When we gave a working prototype of the app to testing groups, we discovered that one of the first things people wanted to measure was a person's height (on each other, or by taking it home and trying it on their children). As we observed them using this feature, we found an opportunity to improve the experience by giving them a visual guide: the plumb bob. This feature provides an absolute height to the ground, based on user actions and scene understanding, as well as a current measurement.
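One common way to get a height above the ground is to take the perpendicular distance from a measured 3D point to a detected floor plane. The sketch below shows that geometry only. How the app actually derived the ground from scene understanding is not described here, so the plane representation (`normal · p + offset = 0`), the function names, and the sample values are all assumptions.

```python
import math

def height_above_plane(point, normal, offset):
    """Perpendicular distance from a 3D point to the plane normal . p + offset = 0."""
    length = math.sqrt(sum(n * n for n in normal))
    if length == 0:
        raise ValueError("plane normal must be non-zero")
    signed = (sum(n * p for n, p in zip(normal, point)) + offset) / length
    return abs(signed)

def plumb_foot(point, normal, offset):
    """Point on the plane directly 'below' the measured point,
    i.e. where a plumb line dropped from it would touch the ground."""
    length = math.sqrt(sum(n * n for n in normal))
    if length == 0:
        raise ValueError("plane normal must be non-zero")
    signed = (sum(n * p for n, p in zip(normal, point)) + offset) / length
    return tuple(p - signed * n / length for p, n in zip(point, normal))

# Hypothetical scene: the floor is the plane y = -1.5 (camera held 1.5 m up),
# and the user taps the top of a person's head.
floor_normal = (0.0, 1.0, 0.0)
floor_offset = 1.5
head = (0.2, 0.25, 2.0)

height_m = height_above_plane(head, floor_normal, floor_offset)
foot = plumb_foot(head, floor_normal, floor_offset)
```

In this example the head is 1.75 m above the floor, and the plumb line ends at the floor point directly beneath it, which is where a visual guide like the plumb bob would be drawn.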

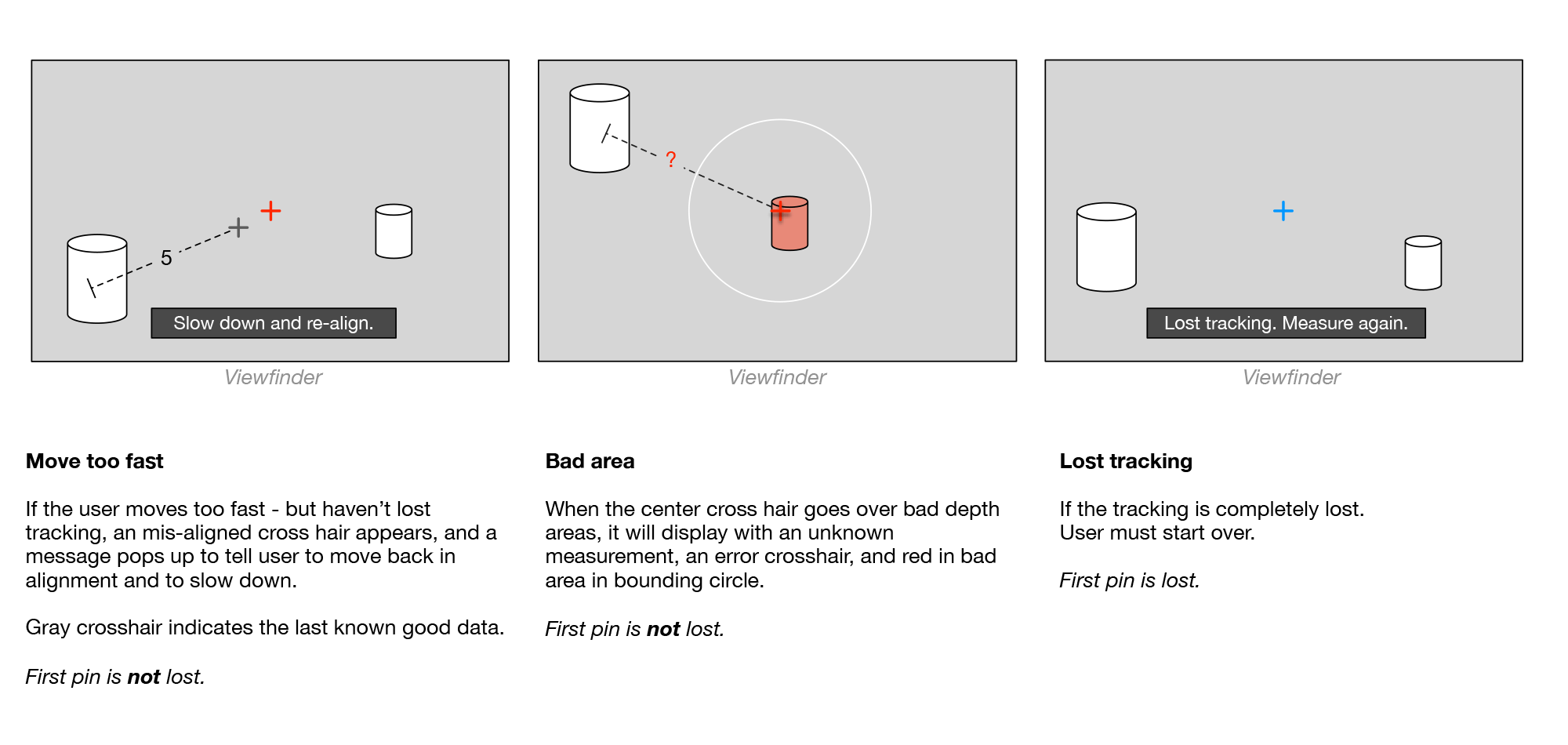

The error scenarios also changed with live measurement. When measurements were created on a still photo, the only error information we needed to display was bad depth map data. However, with movement and scene analysis, there were additional issues with tracking.

We took what we learned about not overwhelming the user with error information and showed error information only in the center of the screen.

YaYa Shang © 2016